Junlin Yang

Hi, I'm Junlin Yang, a senior undergraduate student in the Department of Computer Science and Technology at Tsinghua University. I am proud to be joining Tsinghua as an incoming PhD student, advised by Bowen Zhou and Ning Ding. I have had the privilege of working as a research intern at The University of Hong Kong with Prof. Tao Yu, and at the University of Illinois Urbana-Champaign with Prof. Hao Peng.

Feel free to reach out if you are interested in my research, potential collaborations, or just a friendly chat!

Email / Twitter / Bluesky / GitHub / Google Scholar

Research

I am particularly interested in Machine Learning, with a focus on NLP, Reinforcement Learning, and Multimodal Learning. My research has focused on building embodied agents, especially computer agents, that excel at solving human tasks and collaborate effectively with people. Recently, I have become interested in developing scalable methods toward general, self-evolving reasoning intelligence.

\* indicates equal contribution


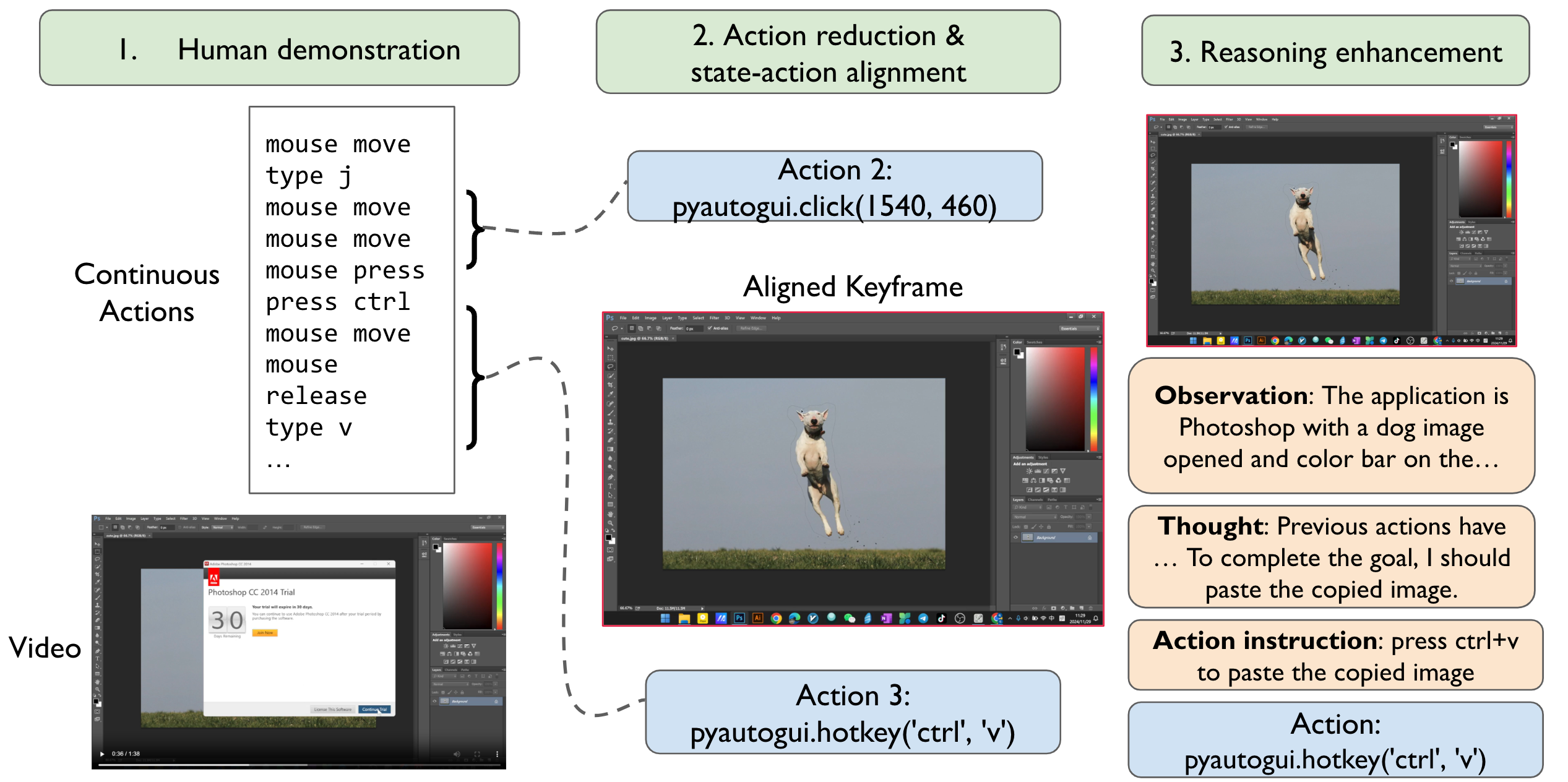

OpenCUA: Open Foundations for Computer-Use Agents

Xinyuan Wang*, Bowen Wang*, Dunjie Lu*, Junlin Yang*, Tianbao Xie*, Junli Wang*, Jiaqi Deng, Xiaole Guo, Yiheng Xu, Chen Henry Wu, Zhennan Shen, Zhuokai Li, Ryan Li, Xiaochuan Li, Junda Chen, Boyuan Zheng, Peihang Li, Fangyu Lei, Ruisheng Cao, Yeqiao Fu, Dongchan Shin, Martin Shin, Jiarui Hu, Yuyan Wang, Jixuan Chen, Yuxiao Ye, Danyang Zhang, Yipu Wang, Heng Wang, Diyi Yang, Victor Zhong, Y.Charles, Zhilin Yang, Tao Yu

NeurIPS 2025 (Spotlight) / COLM 2025 Workshop AIA (Oral)

project page

TL;DR: We present OpenCUA, a comprehensive open-source framework for scaling CUA data and foundation models, which includes an annotation infrastructure, the first large-scale computer-use task dataset, and a scalable pipeline that transforms demonstrations into state–action pairs with reflective long Chain-of-Thought reasoning. Our end-to-end agent model, OpenCUA-32B, achieves an average success rate of 32.5% on OSWorld-Verified, establishing a new state-of-the-art (SOTA) among open-source models and surpassing OpenAI CUA (GPT-4o).


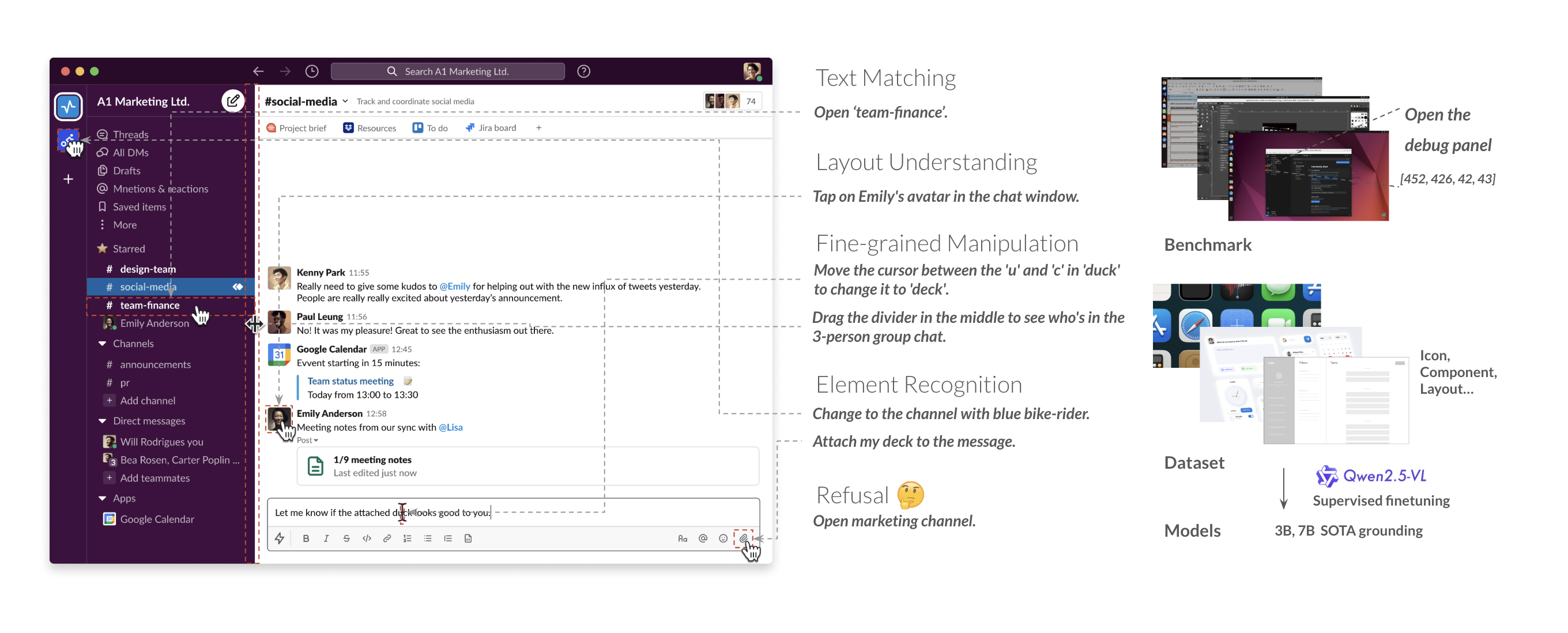

Scaling Computer-Use Grounding via User Interface Decomposition and Synthesis

Tianbao Xie*, Jiaqi Deng*, Xiaochuan Li*, Junlin Yang*, Haoyuan Wu, Jixuan Chen, Wenjing Hu, Xinyuan Wang, Yuhui Xu, Zekun Wang, Yiheng Xu, Junli Wang, Doyen Sahoo, Tao Yu, Caiming Xiong

NeurIPS 2025 D&B Track (Spotlight)

project page / arXiv / Code

TL;DR: GUI grounding is essential for computer-use agents, but current benchmarks oversimplify the task. We introduce OSWorld-G, a detailed benchmark, and Jedi, the largest GUI grounding dataset with 4M examples. Models trained on Jedi outperform existing methods and boost AI agents' task success on OSWorld from 5% to 27%. Our studies show that specialized, diverse data improves generalization to new interfaces.

Projects

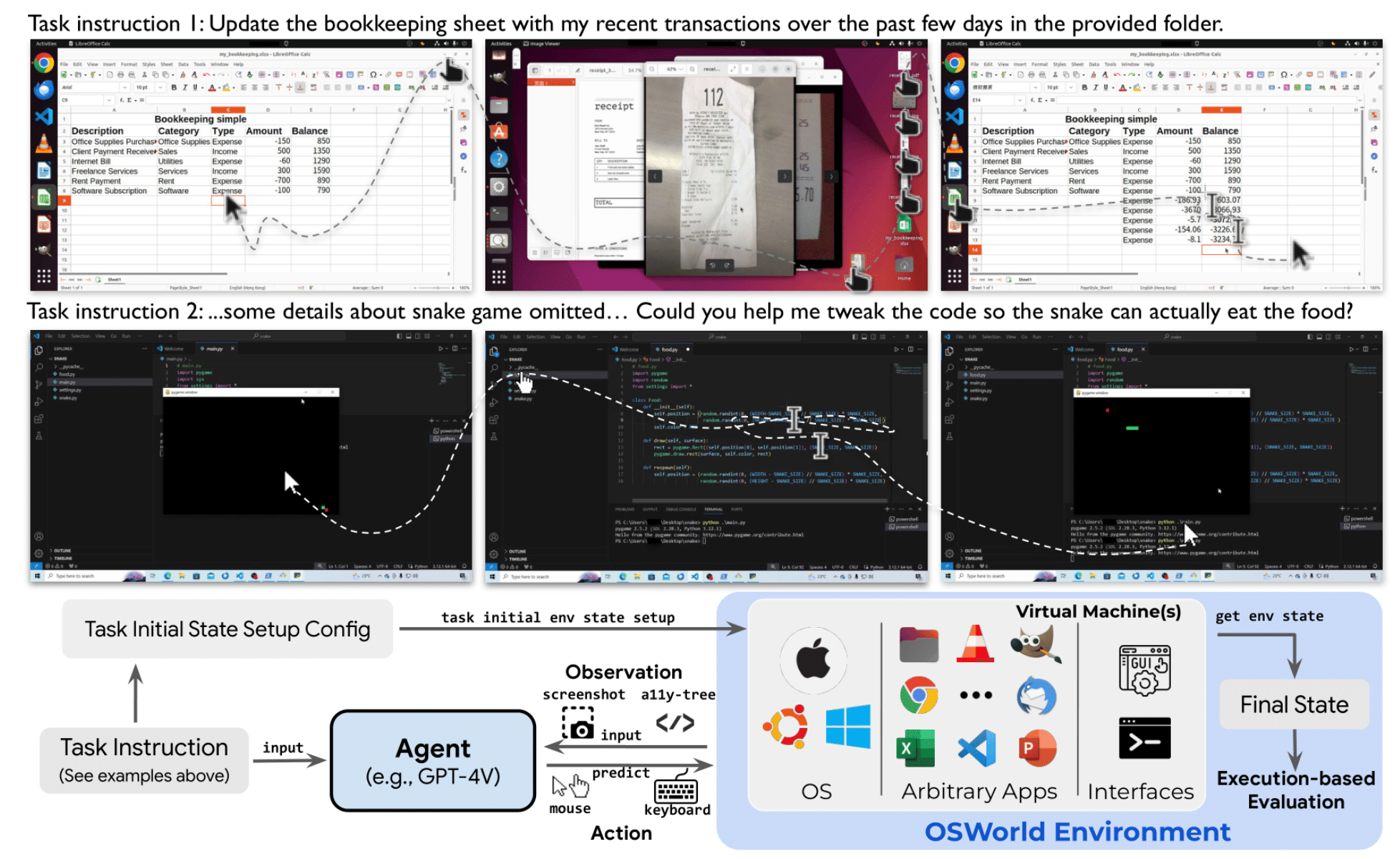

OSWorld: Benchmarking Multimodal Agents for Open-Ended Tasks in Real Computer Environments

Code

TL;DR: OSWorld is a first-of-its-kind scalable, real computer environment for multimodal agents, supporting task setup, execution-based evaluation, and interactive learning across operating systems. It has been adopted by CUA research teams at OpenAI, Anthropic, ByteDance, and others, and has gained over 2k stars on GitHub.

Selected Awards and Honors

Languages

Service and Leadership

I founded Chi Mu Zhi Jie (尺木之阶), the first alumni mutual-help platform at Shenzhen Experimental High School, my high school alma mater. The platform aims to bridge the information gap for high school students from diverse economic, familial, and cognitive backgrounds by giving them comprehensive insights into university academic life. By sharing experiences, offering guidance, and fostering a supportive community, it has become a vital resource for students who might otherwise lack access to such information. To date, it has received over 50k reads and attracted more than 2.5k followers.

Miscellanea

Senior Mentors

At Tsinghua, I was fortunate to meet some incredibly kind, talented, and supportive seniors, including Yuxuan Li and Zirui Cheng. During my internship at HKU, I was lucky to work closely with Tianbao Xie and Yiheng Xu. I'm also grateful to have collaborated with Xinyuan Wang and Bowen Wang on projects like AgentNet.

Hobbies

The source code is inspired by Jon Barron's website. Thanks to him for sharing it! 🙏